AI has been in the headlines of major newspapers, accompanied by all kinds of doom projections. Reference 1, from earlier this year, lists 11 areas that should cause concern. These include AI replacing humans in a variety of jobs, resulting in significant reductions in the workforce. The one most relevant to today's concerns is the impact on the environment.

AI can help in establishing low-emission infrastructure and in related efforts, where improved algorithms provide a better understanding of the activities impacting the environment. This sounds good, but, as with most things, there is a catch.

Training advanced AI models requires quality data, and obtaining and processing that data takes computing power. Using the results to train a large, focused model consumes still more energy. Reference 2 gives examples of training data sizes for models. OpenAI trained its GPT-3 on 45 terabytes of data. Microsoft trained a smaller system (less data) using 512 Nvidia GPUs for nine days. The energy consumption was 27,648 kilowatt-hours, or enough energy to power 3 homes for a year. This was for a smaller model than GPT-3.
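The training figures quoted above can be cross-checked with a little arithmetic. The sketch below assumes each GPU draws about 250 W; that per-GPU figure is not given in this article and is only an illustrative assumption. Under that assumption, 512 GPUs running for nine days works out to the 27,648 figure when it is expressed in kilowatt-hours.

```python
# Back-of-the-envelope estimate of training energy.
# ASSUMPTION: 250 W per GPU is an illustrative value, not taken from the article.
GPU_COUNT = 512        # from the text
TRAINING_DAYS = 9      # from the text
WATTS_PER_GPU = 250    # assumed average draw per GPU


def training_energy_kwh(gpu_count, watts_per_gpu, days):
    """Return energy in kilowatt-hours for GPUs running continuously."""
    total_kw = gpu_count * watts_per_gpu / 1000  # combined power in kW
    hours = days * 24                            # total running time
    return total_kw * hours                      # energy = power x time


energy = training_energy_kwh(GPU_COUNT, WATTS_PER_GPU, TRAINING_DAYS)
print(f"Estimated training energy: {energy:,.0f} kWh")
print(f"Implied use per home per year: {energy / 3:,.0f} kWh")
```

Dividing by three gives the annual household consumption implied by the "3 homes for a year" comparison. Real GPU power draw varies with workload, and cooling and other overhead are ignored here, so this is only a rough consistency check.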

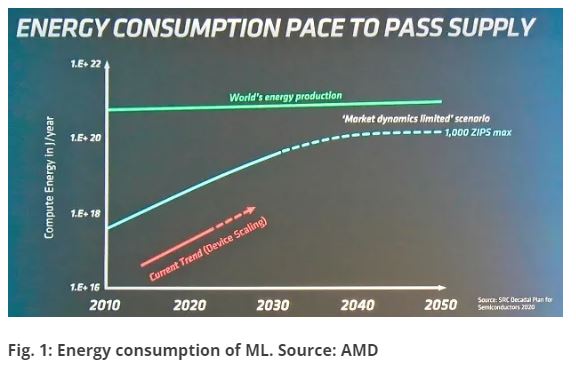

As the capabilities of the models increase, the amount of data grows exponentially. Reference 3 has a graph, Figure 1, which projects that the energy demand of machine learning systems will push toward the total of the world power supply. The energy demand is growing so quickly because more accurate models require more data, and more accurate models generate more profit.

There is another issue. The available semiconductor processing capacity is a limiting factor. Therefore, more wafer fabs are required, and the fabs themselves are a power consumption concern. The storage clouds are not exempt from this increase in power requirements. Reference 4 indicates that the GPU-based computer racks in the storage center require 4 times as much power as a traditional CPU rack. There is work being done in this area to reduce the power requirements, but that reduction is being outstripped by the increase in data being processed.

What is the difference between CPU and GPU racks that increases the power consumption? A CPU (Central Processing Unit) is the main controller for all the circuitry. It handles a wide variety of tasks and runs processes serially, with a number of cores that does not currently exceed 64. Most desktops have fewer than 12 cores. This unit is efficient at processing one task at a time. The GPU (Graphics Processing Unit) is specially designed to handle many smaller processes at once, such as graphics or video rendering. Its core count is in the thousands, which lets it run processes in parallel.

This gives the specialized GPU the ability to carry a heavy processing load without having to be concerned with the other tasks the computer needs to do. Its circuitry does not include the capability for day-to-day operations, but instead has extra computational power directed at specific tasks.
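The serial-versus-parallel difference can be illustrated with a deliberately simplified model. The sketch below assumes every core handles exactly one small task per round and that all cores are equally fast, which is not true of real hardware (GPU cores are much simpler than CPU cores). The task count and the 4,096-core GPU are hypothetical values chosen for illustration; only the 64-core CPU figure comes from the text.

```python
import math


def rounds_needed(task_count: int, core_count: int) -> int:
    """Rounds required if each core processes one task per round."""
    return math.ceil(task_count / core_count)


TASKS = 1_000_000   # hypothetical number of small, independent tasks
CPU_CORES = 64      # upper bound on CPU cores quoted in the text
GPU_CORES = 4096    # hypothetical stand-in for "thousands" of GPU cores

cpu_rounds = rounds_needed(TASKS, CPU_CORES)
gpu_rounds = rounds_needed(TASKS, GPU_CORES)

print(f"CPU rounds: {cpu_rounds}")  # 15625
print(f"GPU rounds: {gpu_rounds}")  # 245
```

The model ignores clock speed, memory bandwidth, and scheduling overhead. It only shows why a workload made of many small independent tasks finishes in far fewer rounds when thousands of cores work at once.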

The net result is that faster processing consumes more power. That power must be generated somewhere, and the increase in electricity generation raises concerns about the total impact on the environment. This is where AI becomes an environmental concern.

References:

1. https://blog.coupler.io/artificial-intelligence-issues/

2. https://www.techtarget.com/searchenterpriseai/feature/Energy-consumption-of-AI-poses-environmental-problems

3. https://semiengineering.com/ai-power-consumption-exploding/

4. https://flexpowermodules.com/ai-the-need-for-high-power-lev

5. https://www.cdw.com/content/cdw/en/articles/hardware/cpu-vs-gpu.html